#Mainframe Modernization Services

Your Trusted Partner in Mainframe Modernization: Vrnexgen

Vrnexgen is your trusted partner in mainframe modernization, offering seamless migration, integration, and transformation of legacy systems. With deep expertise, innovative tools, and a customer-first approach, we ensure a secure, scalable, and future-ready digital enterprise journey.

Modernizing Mainframes: Unleashing the Power of Digital Cloud Services

Introduction

In the era of digital transformation, where agility and scalability are paramount, mainframe systems, although venerable, can become significant roadblocks for organizations seeking to innovate and stay competitive. Modernizing mainframes through the adoption of digital cloud services has emerged as a strategic imperative. In this comprehensive guide, we'll delve into the world of mainframe modernization, exploring its significance, challenges, benefits, and best practices.

The Significance of Mainframe Modernization

Mainframe systems have been the backbone of many organizations for decades, providing stability and reliability. However, these legacy systems come with inherent limitations, such as high maintenance costs, rigidity, and difficulty in integrating with modern technologies. Mainframe modernization is the process of transitioning from these monolithic systems to agile, cloud-based solutions, and its significance lies in the transformative potential it offers:

Agility and Scalability: Cloud-based systems provide the agility and scalability required to adapt to rapidly changing business environments and accommodate growth seamlessly.

Cost Optimization: Modernizing mainframes can lead to substantial cost savings by reducing the need for expensive hardware and lowering maintenance costs.

Enhanced Performance: Cloud services are designed for high performance, offering faster data processing and reducing downtime, resulting in improved operational efficiency.

Flexibility: Cloud environments enable organizations to adopt a flexible, pay-as-you-go approach to resource allocation, eliminating the need for costly upfront investments.

Innovation: Mainframe modernization opens the door to harnessing advanced technologies such as artificial intelligence (AI), machine learning (ML), and the Internet of Things (IoT) to drive innovation.

Global Reach: Cloud services provide global accessibility, allowing organizations to serve a broader customer base without the need for physical data centers in multiple locations.

Benefits of Mainframe Modernization

The benefits of modernizing mainframes through digital cloud services are multifaceted and can drive transformational change within an organization. Let's explore these advantages in detail:

Improved Efficiency: Modernized systems can automate manual processes, streamline operations, and reduce the need for manual interventions, resulting in increased efficiency.

Cost Reduction: Cloud services often lead to cost savings by eliminating the need for substantial capital investments in hardware and reducing ongoing operational expenses.

Scalability: Cloud environments provide the ability to scale resources up or down based on demand, ensuring optimal performance and cost-efficiency.

Enhanced Security: Leading cloud providers invest heavily in security measures, offering robust protection against cyber threats and vulnerabilities.

Business Continuity: Cloud-based disaster recovery and backup solutions ensure data integrity and availability even in the face of unforeseen events.

Agility and Innovation: Modernized systems can quickly adopt and integrate new technologies, fostering innovation and adaptability.

Challenges in Mainframe Modernization

While the benefits of mainframe modernization are compelling, organizations must be prepared to navigate the challenges that come with this transformation:

Legacy Integration: Migrating away from mainframes often involves complex integration with existing systems, which can be time-consuming and challenging.

Data Migration: Transferring large volumes of data from mainframes to the cloud can be cumbersome and requires meticulous planning to ensure data integrity and security.

Security Concerns: While cloud providers offer robust security measures, organizations must also take responsibility for securing their data and applications in the cloud.

Change Management: Employees may resist changes to their familiar workflows and systems. Effective change management strategies are crucial to a successful migration.

Vendor Lock-In: Choosing the right cloud provider is essential, as vendor lock-in can limit flexibility and increase costs in the long run.

Best Practices for Mainframe Modernization

To overcome these challenges and ensure a successful mainframe modernization, organizations should adhere to best practices:

Comprehensive Assessment: Begin with a thorough assessment of your current mainframe environment to understand its intricacies and dependencies.

Clear Goals and Prioritization: Define clear modernization goals and prioritize workloads based on business value, complexity, and readiness for migration.

Data Management Strategy: Develop a robust data migration strategy that includes data cleansing, validation, and migration testing to minimize risks and maintain data integrity.

Security First: Implement stringent security measures, including encryption, access controls, and regular compliance audits, to protect data in transit and at rest.

Change Management: Invest in change management programs to prepare employees for the transition and ensure they can effectively utilize modernized systems.

Cost Optimization: Continuously monitor and optimize cloud costs to prevent overspending and ensure that resources are used efficiently.

Vendor Evaluation: Carefully evaluate cloud service providers based on factors like performance, security, pricing, and vendor lock-in before making a decision.
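For the data management strategy above, one simple validation check is to reconcile record counts and an order-independent checksum between the mainframe extract and the migrated target. This is a minimal sketch that assumes the source records are fixed-width EBCDIC text in code page 037 (Python's `cp037` codec) and the target rows are UTF-8; `fingerprint` and `reconcile` are hypothetical names, and a real reconciliation would also compare individual fields, including packed-decimal ones.

```python
import hashlib

MOD = 2 ** 256


def fingerprint(rows):
    """Return (row count, order-independent digest) for an iterable of text rows."""
    count, total = 0, 0
    for row in rows:
        digest = hashlib.sha256(row.encode("utf-8")).digest()
        # Adding digests (mod 2**256) makes the result independent of row order.
        total = (total + int.from_bytes(digest, "big")) % MOD
        count += 1
    return count, total


def reconcile(source_records, target_records):
    """Compare EBCDIC (cp037) source records with UTF-8 target rows after normalizing."""
    # Assumption: trailing padding is not significant, so it is stripped on both sides.
    source_rows = (r.decode("cp037").rstrip() for r in source_records)
    target_rows = (r.decode("utf-8").rstrip() for r in target_records)
    return fingerprint(source_rows) == fingerprint(target_rows)


# Hypothetical sample: padded fixed-width source records vs. trimmed, reordered target rows.
source = [s.encode("cp037") for s in ("0001 SMITH   ", "0002 JONES   ")]
target = [s.encode("utf-8") for s in ("0002 JONES", "0001 SMITH")]
print(reconcile(source, target))  # True
```

Summing per-row digests, rather than hashing the whole file, keeps the check insensitive to row order, which often changes during a migration.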

Conclusion

Mainframe modernization through the adoption of digital cloud services is a strategic imperative for organizations aiming to thrive in the digital age. By breaking free from the constraints of legacy mainframe systems and embracing the agility and scalability offered by the cloud, businesses can position themselves for long-term success and continued growth.

While challenges exist, a well-defined strategy, commitment to security, and adherence to best practices can pave the way for a successful mainframe modernization. Embracing the cloud isn't just an upgrade; it's a strategic transformation that can redefine how organizations operate, innovate, and remain competitive in a rapidly evolving digital landscape.

Mainframe Performance Optimization Techniques

Mainframe performance optimization is crucial for organizations relying on these powerful computing systems to ensure efficient and cost-effective operations. Here are some key techniques and best practices for optimizing mainframe performance:

1. Capacity Planning: Understand your workload and resource requirements. Accurately estimate future needs to allocate resources efficiently. This involves monitoring trends, historical data analysis, and growth projections.

2. Workload Management: Prioritize and allocate resources based on business needs. Ensure that critical workloads get the necessary resources while lower-priority tasks are appropriately throttled.

3. Batch Window Optimization: Efficiently schedule batch jobs to maximize system utilization. Minimize overlap and contention for resources during batch processing windows.

4. Storage Optimization: Regularly review and manage storage capacity. Employ data compression, data archiving, and data purging strategies to free up storage resources.

5. Indexing and Data Access: Optimize database performance by creating and maintaining efficient indexes. Tune SQL queries to minimize resource consumption and improve response times.

6. CICS and IMS Tuning: Tune your transaction processing environments like CICS (Customer Information Control System) and IMS (Information Management System) to minimize response times and resource utilization.

7. I/O Optimization: Reduce I/O bottlenecks by optimizing the placement of data sets and using techniques like buffering and caching.

8. Memory Management: Efficiently manage mainframe memory to minimize paging and maximize available RAM for critical tasks. Monitor memory usage and adjust configurations as needed.

9. CPU Optimization: Monitor CPU usage and identify resource-intensive tasks. Optimize code, reduce unnecessary CPU cycles, and consider parallel processing for CPU-bound tasks.

10. Subsystem Tuning: A mainframe environment typically runs several subsystems on top of the z/OS operating system, such as Db2, CICS, IMS, and MQ. Each subsystem should be tuned for optimal performance based on specific workload requirements.

11. Parallel Processing: Leverage parallel processing capabilities to distribute workloads across multiple processors or regions to improve processing speed and reduce contention.

12. Batch Processing Optimization: Optimize batch job execution by minimizing I/O, improving sorting algorithms, and parallelizing batch processing tasks.

13. Compression Techniques: Use compression algorithms to reduce the size of data stored on disk, which can lead to significant storage and I/O savings.

14. Monitoring and Performance Analysis Tools: Employ specialized tools and monitoring software to continuously assess system performance, detect bottlenecks, and troubleshoot issues in real-time.

15. Tuning Documentation: Maintain comprehensive documentation of configuration settings, tuning parameters, and performance benchmarks. This documentation helps in identifying and resolving performance issues effectively.

16. Regular Maintenance: Keep the mainframe software and hardware up-to-date with the latest patches and updates provided by the vendor. Regular maintenance can resolve known performance issues.

17. Training and Skill Development: Invest in training for your mainframe staff to ensure they have the skills and knowledge to effectively manage and optimize the system.

18. Cost Management: Consider the cost implications of performance tuning. Sometimes, adding more resources may be more cost-effective than extensive tuning efforts.

19. Capacity Testing: Conduct load and stress testing to evaluate how the mainframe handles peak workloads. Identify potential bottlenecks and make necessary adjustments.

20. Security Considerations: Ensure that performance optimizations do not compromise mainframe security. Balance performance improvements with security requirements.
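Capacity planning (item 1) often starts with a simple trend projection over historical usage. This minimal sketch fits an ordinary least-squares line to hypothetical monthly peak figures (expressed here in MSUs) and estimates how many months remain before the trend crosses installed capacity. The data, the capacity figure, and the linear model are all assumptions; real workloads usually need seasonal peaks and business growth plans factored in.

```python
def fit_trend(history):
    """Ordinary least-squares fit of value = intercept + slope * month."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = sum(x for x, _ in history) / n
    mean_y = sum(y for _, y in history) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in history)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in history)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def months_until_capacity(history, capacity):
    """Months after the last observation until the fitted trend reaches `capacity`.

    Returns None when the trend is flat or falling (no projected crossing).
    """
    intercept, slope = fit_trend(history)
    if slope <= 0:
        return None
    last_month = history[-1][0]
    crossing = (capacity - intercept) / slope
    return max(0.0, crossing - last_month)


# Hypothetical monthly peak usage as (month index, MSUs); installed capacity 1500 MSUs.
history = [(0, 1000), (1, 1050), (2, 1100), (3, 1150)]
print(months_until_capacity(history, 1500))  # 7.0
```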
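Shortening the batch window (item 3) starts with knowing which chain of dependent jobs actually determines its length. The sketch below computes that critical path from a dependency graph using Python's standard `graphlib`. The job names, durations, and the assumption of unlimited parallel initiators are hypothetical simplifications, not how any particular z/OS scheduler models a job stream.

```python
from graphlib import TopologicalSorter


def critical_path(durations, depends_on):
    """Return (batch window length, critical path) for a job stream.

    Assumes every predecessor also appears in `durations` and that enough
    initiators exist to run independent jobs at the same time.
    """
    graph = {job: tuple(depends_on.get(job, ())) for job in durations}
    finish, via = {}, {}
    for job in TopologicalSorter(graph).static_order():
        preds = graph[job]
        # A job can start only when its slowest predecessor has finished.
        prev = max(preds, key=lambda p: finish[p]) if preds else None
        start = finish[prev] if prev is not None else 0
        finish[job] = start + durations[job]
        via[job] = prev
    # Walk back from the job that finishes last to recover the critical path.
    job = max(finish, key=finish.get)
    window = finish[job]
    path = []
    while job is not None:
        path.append(job)
        job = via[job]
    return window, path[::-1]


# Hypothetical nightly stream; durations in minutes.
durations = {"EXTRACT": 30, "SORT": 20, "RPT_A": 15, "RPT_B": 40, "LOAD": 10}
depends_on = {"SORT": ["EXTRACT"], "RPT_A": ["SORT"], "RPT_B": ["SORT"], "LOAD": ["RPT_A", "RPT_B"]}
print(critical_path(durations, depends_on))  # (100, ['EXTRACT', 'SORT', 'RPT_B', 'LOAD'])
```

Only jobs on the critical path shorten the window when tuned: in this example, speeding up RPT_A would not move the 100-minute finish at all.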
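The indexing advice in item 5 is easiest to see in an engine's access-path output. This sketch uses SQLite (through Python's built-in `sqlite3`) purely as a stand-in for Db2, which has its own EXPLAIN facility; the `accounts` table and the index name are hypothetical. It captures the query plan before and after adding an index on the filtered column.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the human-readable 'detail' column(s) of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (acct_id INTEGER PRIMARY KEY, branch TEXT, balance REAL)")
conn.executemany(
    "INSERT INTO accounts (branch, balance) VALUES (?, ?)",
    [(f"BR{i % 50:03d}", i * 1.5) for i in range(10_000)],
)

query = "SELECT acct_id, balance FROM accounts WHERE branch = ?"
before = query_plan(conn, query, ("BR007",))  # expected: full table scan
conn.execute("CREATE INDEX idx_accounts_branch ON accounts (branch)")
after = query_plan(conn, query, ("BR007",))   # expected: index search on branch
print("before:", before)
print("after: ", after)
```

The exact plan wording varies between SQLite versions, but the first plan reports a SCAN of the table and the second a SEARCH using `idx_accounts_branch`.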
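Much of item 7's I/O advice comes down to blocking: with fixed-length records, packing many logical records into each physical block cuts the number of physical reads roughly by the blocking factor. This is a back-of-the-envelope sketch, assuming RECFM=FB records and the commonly cited 27,998-byte half-track limit for 3390 devices; it ignores buffering, controller cache, and the system-determined block size z/OS can choose when BLKSIZE is omitted.

```python
import math

# Commonly cited optimal half-track block size for 3390 DASD (assumption for this sketch).
HALF_TRACK_3390 = 27998


def blocking(lrecl, max_block=HALF_TRACK_3390):
    """Return (records per block, block size) for fixed-length (FB) records."""
    per_block = max_block // lrecl
    return per_block, per_block * lrecl


def physical_reads(record_count, lrecl, blocked=True):
    """Approximate physical block reads for one sequential pass over the data set."""
    per_block = blocking(lrecl)[0] if blocked else 1
    return math.ceil(record_count / per_block)


print(blocking(80))                                  # (349, 27920)
print(physical_reads(1_000_000, 80))                 # 2866
print(physical_reads(1_000_000, 80, blocked=False))  # 1000000
```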

Mainframe performance optimization is an ongoing process that requires constant monitoring and adjustment to meet evolving business needs. By implementing these techniques and best practices, organizations can maximize the value of their mainframe investments and ensure smooth and efficient operations.

Digital Transformation Services

Your Switch to a Digital Future – Digital Transformation Consulting Services

As a leading digital transformation company and service provider, Enterprise Mobility has been guiding enterprises through their digital transformation journeys for two decades now.

The so-called Department of Government Efficiency (DOGE) is starting to put together a team to migrate the Social Security Administration’s (SSA) computer systems entirely off one of its oldest programming languages in a matter of months, potentially putting the integrity of the system—and the benefits on which tens of millions of Americans rely—at risk.

The project is being organized by Elon Musk lieutenant Steve Davis, multiple sources who were not given permission to talk to the media tell WIRED, and aims to migrate all SSA systems off COBOL, one of the first common business-oriented programming languages, and onto a more modern replacement like Java within a scheduled tight timeframe of a few months.

Under any circumstances, a migration of this size and scale would be a massive undertaking, experts tell WIRED, but the expedited deadline runs the risk of obstructing payments to the more than 65 million people in the US currently receiving Social Security benefits.

“Of course, one of the big risks is not underpayment or overpayment per se; [it’s also] not paying someone at all and not knowing about it. The invisible errors and omissions,” an SSA technologist tells WIRED.

The Social Security Administration did not immediately reply to WIRED’s request for comment.

SSA has been under increasing scrutiny from president Donald Trump’s administration. In February, Musk took aim at SSA, falsely claiming that the agency was rife with fraud. Specifically, Musk pointed to data he allegedly pulled from the system that showed 150-year-olds in the US were receiving benefits, something that isn’t actually happening. Over the last few weeks, following significant cuts to the agency by DOGE, SSA has suffered frequent website crashes and long wait times over the phone, The Washington Post reported this week.

This proposed migration isn’t the first time SSA has tried to move away from COBOL: In 2017, SSA announced a plan to receive hundreds of millions in funding to replace its core systems. The agency predicted that it would take around five years to modernize these systems. Because of the coronavirus pandemic in 2020, the agency pivoted away from this work to focus on more public-facing projects.

Like many legacy government IT systems, SSA systems contain code written in COBOL, a programming language created in part in the 1950s by computing pioneer Grace Hopper. The Defense Department essentially pressured private industry to use COBOL soon after its creation, spurring widespread adoption and making it one of the most widely used languages for mainframes, or computer systems that process and store large amounts of data quickly, by the 1970s. (At least one DOD-related website praising Hopper's accomplishments is no longer active, likely following the Trump administration’s DEI purge of military acknowledgements.)

As recently as 2016, SSA’s infrastructure contained more than 60 million lines of code written in COBOL, with millions more written in other legacy coding languages, the agency’s Office of the Inspector General found. In fact, SSA’s core programmatic systems and architecture haven’t been “substantially” updated since the 1980s when the agency developed its own database system called MADAM, or the Master Data Access Method, which was written in COBOL and Assembler, according to SSA’s 2017 modernization plan.

SSA’s core “logic” is also written largely in COBOL. This is the code that issues social security numbers, manages payments, and even calculates the total amount beneficiaries should receive for different services, a former senior SSA technologist who worked in the office of the chief information officer says. Even minor changes could result in cascading failures across programs.

“If you weren't worried about a whole bunch of people not getting benefits or getting the wrong benefits, or getting the wrong entitlements, or having to wait ages, then sure go ahead,” says Dan Hon, principal of Very Little Gravitas, a technology strategy consultancy that helps government modernize services, about completing such a migration in a short timeframe.

It’s unclear when exactly the code migration would start. A recent document circulated amongst SSA staff laying out the agency’s priorities through May does not mention it, instead naming other priorities like terminating “non-essential contracts” and adopting artificial intelligence to “augment” administrative and technical writing.

Earlier this month, WIRED reported that at least 10 DOGE operatives were currently working within SSA, including a number of young and inexperienced engineers like Luke Farritor and Ethan Shaotran. At the time, sources told WIRED that the DOGE operatives would focus on how people identify themselves to access their benefits online.

Sources within SSA expect the project to begin in earnest once DOGE identifies and marks remaining beneficiaries as deceased and connects disparate agency databases. In a Thursday morning court filing, an affidavit from SSA acting administrator Leland Dudek said that at least two DOGE operatives are currently working on a project formally called the “Are You Alive Project,” targeting what these operatives believe to be improper payments and fraud within the agency’s system by calling individual beneficiaries. The agency is currently battling for sweeping access to SSA’s systems in court to finish this work. (Again, 150-year-olds are not collecting social security benefits. That specific age was likely a quirk of COBOL. It doesn’t include a date type, so dates are often coded to a specific reference point: May 20, 1875, the date of an international standards-setting conference held in Paris, known as the Convention du Mètre.)

In order to migrate all COBOL code into a more modern language within a few months, DOGE would likely need to employ some form of generative artificial intelligence to help translate the millions of lines of code, sources tell WIRED. “DOGE thinks if they can say they got rid of all the COBOL in months, then their way is the right way, and we all just suck for not breaking shit,” says the SSA technologist.

DOGE would also need to develop tests to ensure the new system’s outputs match the previous one. It would be difficult to resolve all of the possible edge cases over the course of several years, let alone months, adds the SSA technologist.
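The output-matching tests described here are usually called differential or parallel-run testing: feed the same inputs to the old and new implementations and flag every output that differs. The sketch below is purely illustrative; both benefit functions are hypothetical and have nothing to do with SSA's actual formulas. It shows how a port that silently switches from COBOL-style truncation (COBOL arithmetic truncates excess decimal places unless the ROUNDED phrase is used) to round-half-even produces exactly the kind of one-cent "invisible error" the SSA technologist warns about.

```python
from decimal import ROUND_DOWN, ROUND_HALF_EVEN, Decimal

CENT = Decimal("0.01")


def legacy_monthly_benefit(annual):
    """Hypothetical legacy routine: divide by 12 and truncate to cents."""
    return (Decimal(annual) / 12).quantize(CENT, rounding=ROUND_DOWN)


def ported_monthly_benefit(annual):
    """Hypothetical naive port that rounds half-to-even instead of truncating."""
    return (Decimal(annual) / 12).quantize(CENT, rounding=ROUND_HALF_EVEN)


def differential_test(cases, old, new):
    """Run both implementations on every case; return (case, old, new) where they differ."""
    mismatches = []
    for case in cases:
        old_out, new_out = old(case), new(case)
        if old_out != new_out:
            mismatches.append((case, old_out, new_out))
    return mismatches


cases = ["12000.00", "2000.00", "1000.00", "0"]
for case, old, new in differential_test(cases, legacy_monthly_benefit, ported_monthly_benefit):
    print(f"{case}: legacy={old} ported={new}")  # 2000.00: legacy=166.66 ported=166.67
```

A check like this only catches divergences on the inputs it is given, which is why covering the edge cases is the hard part.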

“This is an environment that is held together with bail wire and duct tape,” the former senior SSA technologist working in the office of the chief information officer tells WIRED. “The leaders need to understand that they’re dealing with a house of cards or Jenga. If they start pulling pieces out, which they’ve already stated they’re doing, things can break.”

My theory about why this has happened to digital technology is that the shift over the past ten years to pure rent-seeking is driven by the end of Moore's law. Moore's law is basically an observation/projection that the number of transistors you can fit on a single computer chip will double every two years, which means you can create computers that are smaller but also more powerful. This observation held true for decades. It enabled us to go from the only computers being huge mainframes run by institutions to personal computers, then to easily portable computers like laptops, and eventually, in the 2000s, to smartphones. And it means that we can now carry around in our pocket a computer that is vastly more powerful than the biggest and most powerful supercomputers of the 1960s.
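The doubling rule described above can be written as a simple exponential, where $N_0$ is the transistor count in some starting year, $t$ is the elapsed time in years, and $T = 2$ years is the doubling period the post uses:

$$
N(t) = N_0 \cdot 2^{t/T}, \qquad T = 2\ \text{years}
$$

So a decade of doubling multiplies the count by $2^{10/2} = 32$, and twenty years by $2^{20/2} = 1024$. This is the idealized curve, not measured chip data.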

But Moore's law has definitely been dying since at least 2010 and has probably been dead for about a decade. Capitalism, however, requires eternal growth: more profit every year. Previously, the tech industry could sustain that kind of growth naturally thanks to the rapid technological development charted by Moore's law. Computers really were getting better every year, enabling more people to buy them and driving sales up.

And now the tech industry can't do that anymore, with Moore's law being dead. But the tech companies still need to raise profits, and to promise growing profits forever, without the technological innovations to back it up. They have to fill the gap left by plateauing advances in microchip development somehow.

And what's left is various forms of bullshit. Stuff that doesn't give the end user more value for their money and time but makes the shareholders more money, or at least promises to. Planned obsolescence to drive purchases of new hardware when it can no longer be sold on the basis of being meaningfully better than the old hardware. Squeezing more money from an existing customer base with more and more subscription services. More advertising everywhere. Hype about miracle technologies like blockchain and generative AI that will supposedly create massive profits, to fool investors into investing (the AI bubble is already bursting). And that's the state of the modern tech industry. It doesn't help that investors are more cautious now that interest rates have been hiked.

CloudHub BV: Unlocking Business Potential with Advanced Cloud Integration and AI

Introduction

At the helm of CloudHub BV is Susant Mallick, a visionary leader whose expertise spans over 23 years in IT and digital transformation. Under his leadership, CloudHub excels in integrating cloud architecture and AI-driven solutions, helping enterprises gain agility, security, and actionable insights from their data.

Susant Mallick: Pioneering Digital Transformation

A Seasoned Leader

Susant Mallick earned his reputation as a seasoned IT executive, serving in roles at Cognizant and Amazon before founding CloudHub. His leadership combines technical depth, ranging from mainframes to cloud and AI, with strategic vision.

Building CloudHub BV

In 2022, Susant Mallick launched CloudHub to democratize data insights and accelerate digital journeys. The company’s core mission: unlock business potential through intelligent cloud integration, data modernization, and integrations powered by AI.

Core Services Under Susant Mallick’s Leadership

Cloud & Data Engineering

Susant Mallick positions CloudHub as a strategic partner across sectors like healthcare, BFSI, retail, and manufacturing. The company offers end-to-end cloud migration, enterprise data engineering, data governance, and compliance consulting to ensure scalability and reliability.

Generative AI & Automation

Under Susant Mallick, CloudHub spearheads AI-led transformation. With services ranging from generative AI and intelligent document processing to chatbot automation and predictive maintenance, clients realize faster insights and operational efficiency.

Security & Compliance

Recognizing cloud risks, Susant Mallick built CloudHub’s CompQ suite to automate compliance tasks (validating infrastructure, securing access, and integrating regulatory scans into workflows), enhancing reliability in heavily regulated industries.

Innovation in Data Solutions

DataCube Platform

The DataCube, created under Susant Mallick’s direction, accelerates enterprise data platform deployment, reducing timelines from months to days. It includes data mesh, analytics, MLOps, and AI integration, enabling fast access to actionable insights.

Thinklee: AI-Powered BI

Susant Mallick guided the development of Thinklee, an AI-powered business intelligence engine. Using generative AI, natural language queries, and real-time analytics, Thinklee redefines BI, letting users “think with” data rather than manually querying it.

CloudHub’s Impact Across Industries

Healthcare & Life Sciences

With Susant Mallick at the helm, CloudHub supports healthcare innovations, from AI-driven diagnostics to advanced clinical workflows and real-time patient engagement platforms, enhancing outcomes and operational resilience.

Manufacturing & Sustainability

CloudHub’s data solutions help manufacturers reduce CO₂ emissions, optimize supply chains, and automate customer service. These initiatives, championed by Susant Mallick, showcase the company’s commitment to profitable and socially responsible innovation.

Financial Services & Retail

Susant Mallick oversees cloud analytics, customer segmentation, and compliance for BFSI and retail clients. Using predictive models and AI agents, CloudHub helps improve personalization, fraud detection, and process automation.

Thought Leadership & Industry Recognition

Publications & Conferences

Susant Mallick shares his insights through platforms like CIO Today, CIO Business World, LinkedIn, and Time Iconic. He has delivered keynotes at HLTH Europe and DIA Real‑World Evidence conferences, highlighting AI in healthcare.

Awards & Accolades

Under Susant Mallick’s leadership, CloudHub has earned multiple awards — Top 10 Salesforce Solutions Provider, Tech Entrepreneur of the Year 2024, and IndustryWorld recognitions, affirming the company’s leadership in digital transformation.

Strategic Framework: CH‑AIR

GenAI Readiness with CH‑AIR

Susant Mallick introduced the CH‑AIR (CloudHub GenAI Readiness) framework to guide organizations through GenAI adoption. The model assesses AI awareness, talent readiness, governance, and use‑case alignment to balance innovation with measurable value.

Dynamic and Data-Driven Approach

Under Susant Mallick, CH‑AIR provides a data‑driven roadmap — ensuring that new AI and cloud projects align with business goals and deliver scalable impact.

Vision for the Future

Towards Ethical Innovation

Susant Mallick advocates for ethical AI, governance, and transparency — encouraging enterprises to implement scalable, responsible technology. CloudHub promotes frameworks for continuous data security and compliance across platforms.

Scaling Global Impact

Looking ahead, Susant Mallick plans to expand CloudHub’s global footprint. Through technology partnerships, enterprise platforms, and new healthcare innovations, the goal is to catalyze transformation worldwide.

Conclusion

Under Susant Mallick’s leadership, CloudHub BV redefines what cloud and AI integration can achieve in healthcare, manufacturing, finance, and retail. From DataCube to Thinklee and the CH‑AIR framework, the organization delivers efficient, ethical, and high-impact digital solutions. As business landscapes evolve, Susant Mallick and CloudHub are well-positioned to shape the future of strategic, data-driven innovation.

Kyndryl Offers Mainframe Modernization Services Leveraging AWS’ Agentic AI

http://securitytc.com/TLK6mq

VRNexGen: Your Trusted Partner in Mainframe Modernization

VRNexGen is a leading technology consulting firm specializing in mainframe modernization. With a deep understanding of legacy systems and a forward-thinking approach, we assist organizations in transforming their mainframe environments to meet the demands of today's digital landscape.

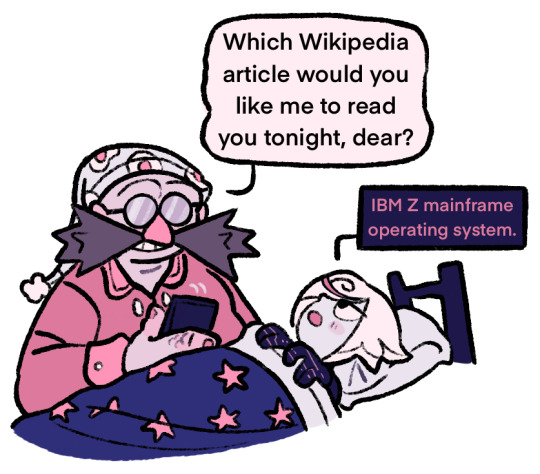

Like OS/390, z/OS combines a number of formerly separate, related products, some of which are still optional. z/OS has the attributes of modern operating systems but also retains much of the older functionality originated in the 1960s and still in regular use—z/OS is designed for backward compatibility.

Major characteristics

z/OS supports[NB 2] stable mainframe facilities such as CICS, COBOL, IMS, PL/I, IBM Db2, RACF, SNA, IBM MQ, record-oriented data access methods, REXX, CLIST, SMP/E, JCL, TSO/E, and ISPF, among others.

z/OS also ships with a 64-bit Java runtime, C/C++ compiler based on the LLVM open-source Clang infrastructure,[3] and UNIX (Single UNIX Specification) APIs and applications through UNIX System Services – The Open Group certifies z/OS as a compliant UNIX operating system – with UNIX/Linux-style hierarchical HFS[NB 3][NB 4] and zFS file systems. These compatibilities make z/OS capable of running a range of commercial and open source software.[4] z/OS can communicate directly via TCP/IP, including IPv6,[5] and includes standard HTTP servers (one from Lotus, the other Apache-derived) along with other common services such as SSH, FTP, NFS, and CIFS/SMB. z/OS is designed for high quality of service (QoS), even within a single operating system instance, and has built-in Parallel Sysplex clustering capability.

Actually, that is wayyy too exciting for a bedtime story! I remember using the internet on a unix terminal long before the world wide web. They were very excited by email, but it didn't impress me much. Browsers changed the world.

Kyndryl, AWS unwrap AI-driven mainframe migration service

Kyndryl is tapping into agentic AI technology from AWS to offer enterprise customers another way to integrate with or move mainframe applications to the AWS Cloud platform. Specifically, Kyndryl will offer an implementation service based on the recently announced AWS Transform package, which analyzes mainframe code bases, decomposes them into domains, and modernizes IBM z/OS applications to Java…

Explore modern mainframe migration strategies for a future-ready IT landscape. Embrace agility, cost-efficiency, and innovation.

How Custom APIs Can Bridge Your Old Systems with Modern Tools

In an era of rapid digital transformation, companies face mounting pressure to stay agile, integrated, and scalable. Yet, for many businesses, legacy systems—software built decades ago—remain deeply embedded in their day-to-day operations. While these systems often house critical data and workflows, they are frequently incompatible with modern tools, cloud services, and mobile apps.

This is where custom APIs (Application Programming Interfaces) come in. Rather than rip and replace your existing systems, custom APIs offer a powerful and cost-effective way to connect legacy infrastructure with modern applications, extending the life and value of your current technology stack.

In this blog post, we explore how custom APIs bridge the gap between the old and new, and why partnering with a custom software development company in New York is the key to unlocking this integration.

Understanding the Legacy Dilemma

Legacy systems are often mission-critical, but they can be:

Built on outdated technologies

Poorly documented

Incompatible with cloud-native tools

Rigid and difficult to scale

Lacking modern security standards

Despite their limitations, many organizations can’t afford to replace these systems outright. The risks of downtime, data loss, and cost overruns are simply too high. The solution? Wrap them in custom APIs to make them work like modern systems—without the need for complete redevelopment.

What Are Custom APIs?

A custom API is a tailored interface that allows software systems—regardless of their age or architecture—to communicate with each other. APIs translate data and commands into a format that both the old system and the new tools can understand.

With a well-designed API, you can:

Expose data from a legacy database to a web or mobile app

Allow cloud tools like Salesforce, Slack, or HubSpot to interact with your internal systems

Enable real-time data sharing across departments and platforms

Automate manual workflows that previously required human intervention
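To make the idea of translating data into a format both sides understand concrete, here is a sketch of the translation layer such an API might contain: it turns one fixed-width mainframe record into JSON. The record layout, field names, and the choice of EBCDIC code page 037 are hypothetical; a real layout would come from the application's COBOL copybook. The packed-decimal (COMP-3) decoder follows the standard layout of two BCD digits per byte with the sign in the final nibble (hexadecimal C or F for positive, D for negative).

```python
import json
from decimal import Decimal

# Hypothetical copybook-derived layout: (field name, offset, length, type).
LAYOUT = [
    ("account_id", 0, 8, "char"),
    ("customer_name", 8, 20, "char"),
    ("balance", 28, 5, "packed"),  # e.g. PIC S9(7)V99 COMP-3: 9 digits + sign in 5 bytes
]


def unpack_comp3(raw, scale=2):
    """Decode a packed-decimal field: two BCD digits per byte, sign in the last nibble."""
    digits = "".join(f"{b >> 4}{b & 0x0F}" for b in raw[:-1]) + str(raw[-1] >> 4)
    sign = -1 if (raw[-1] & 0x0F) == 0x0D else 1
    return sign * Decimal(int(digits)).scaleb(-scale)


def record_to_json(record):
    """Translate one fixed-width EBCDIC (cp037) record into a JSON document."""
    out = {}
    for name, offset, length, kind in LAYOUT:
        raw = record[offset:offset + length]
        if kind == "packed":
            out[name] = str(unpack_comp3(raw))  # string keeps the exact decimal value
        else:
            out[name] = raw.decode("cp037").rstrip()
    return json.dumps(out)


# Hypothetical record: EBCDIC text fields followed by +1234.56 in packed decimal.
record = ("00012345" + "JANE DOE".ljust(20)).encode("cp037") + bytes([0x00, 0x01, 0x23, 0x45, 0x6C])
print(record_to_json(record))
```

Returning the balance as a string rather than a JSON number is a deliberate choice here: it avoids the binary floating-point rounding many JSON consumers would otherwise apply to currency values.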

By working with a software development company in New York, you can create secure, scalable APIs that connect your most valuable legacy systems with cutting-edge technologies.

Why Modern Businesses Need Custom APIs

1. Avoid Expensive System Replacements

Rebuilding or replacing legacy systems is expensive, time-consuming, and risky. Custom APIs offer a lower-cost, lower-risk alternative by extending system capabilities without touching the core.

Companies that work with custom software development companies in New York can protect their existing investments while gaining modern functionality.

2. Enable Integration with Cloud and SaaS Platforms

Most modern SaaS platforms rely on APIs for connectivity. Custom APIs allow legacy systems to seamlessly:

Push data into cloud CRMs or ERPs

Trigger automated workflows via platforms like Zapier or Make

Sync with eCommerce, finance, or HR software

This means your old software can now "talk" to cutting-edge services—without needing a full migration.
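Pushing a record into a cloud tool usually means sending JSON over HTTPS to an endpoint the platform provides, such as an incoming webhook. Below is a minimal sketch using Python's standard library. The webhook URL and the payload fields are placeholders. Real platforms such as Salesforce, Zapier, or Make each define their own endpoints, payload formats, and authentication.

```python
import json
import urllib.request

# Placeholder endpoint: real platforms issue their own webhook URLs.
WEBHOOK_URL = "https://example.com/hooks/legacy-orders"


def build_webhook_request(record: dict) -> urllib.request.Request:
    """Wrap a legacy record as a JSON POST request to a webhook endpoint."""
    body = json.dumps(record).encode("utf-8")
    return urllib.request.Request(
        WEBHOOK_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def push_record(record: dict, timeout: float = 10.0) -> int:
    """Send the record and return the HTTP status code (urlopen raises on HTTP errors)."""
    request = build_webhook_request(record)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Building the request separately from sending it keeps the payload logic testable without network access.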

3. Boost Business Agility

In today's fast-paced environment, agility is a competitive advantage. APIs let you innovate quickly by connecting existing systems to new apps, dashboards, or devices. This flexibility makes it easier to adapt to new customer demands or market trends.

That’s why the top software development companies in New York prioritize API-first development in digital transformation strategies.

Real-World Use Cases: Bridging Old and New with APIs

1. Legacy ERP + Modern BI Tools

A manufacturing firm uses a 20-year-old ERP system but wants modern analytics. A custom software development company in New York creates APIs that extract relevant data and feed it into Power BI or Tableau for real-time dashboards—no need to rebuild the ERP.

2. Mainframe + Mobile Application

A financial services provider wants to offer customers a mobile app without replacing its mainframe. A custom API acts as the bridge, securely transmitting data between the mobile frontend and the back-end system.
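Much of what such a bridge does is translate mainframe data formats into something a mobile frontend can use. IBM mainframes commonly store text in EBCDIC and amounts in packed decimal (COBOL COMP-3). The sketch below assumes a hypothetical record layout: a 10-byte EBCDIC account name followed by a 4-byte packed-decimal balance with two implied decimal places. Python's built-in `cp037` codec handles one common EBCDIC code page. The code page and layout of any real system would need to be confirmed.

```python
from decimal import Decimal


def unpack_comp3(data: bytes, scale: int = 2) -> Decimal:
    """Decode a packed-decimal (COMP-3) field: two digits per byte, sign in the last nibble.

    This sketch does not validate that every digit nibble is 0-9.
    """
    digits = []
    for byte in data[:-1]:
        digits.append(byte >> 4)
        digits.append(byte & 0x0F)
    last = data[-1]
    digits.append(last >> 4)
    sign_nibble = last & 0x0F
    value = int("".join(str(d) for d in digits))
    # 0xB and 0xD mark negative values; 0xC, 0xF and others are treated as positive.
    if sign_nibble in (0x0B, 0x0D):
        value = -value
    # Apply the implied decimal places (e.g. 1234567 with scale 2 -> 12345.67).
    return Decimal(value).scaleb(-scale)


def decode_record(raw: bytes) -> dict:
    """Hypothetical layout: 10-byte EBCDIC name, then a 4-byte COMP-3 balance."""
    name = raw[:10].decode("cp037").rstrip()
    balance = unpack_comp3(raw[10:14])
    # Return the amount as a string so JSON clients do not lose precision.
    return {"name": name, "balance": str(balance)}
```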

3. CRM Integration

A healthcare provider uses a legacy patient database but wants to sync data with Salesforce. Custom APIs enable bidirectional integration, allowing staff to use Salesforce without duplicating data entry.

Key Features of a High-Quality Custom API

When you work with a custom software development company in New York, you can expect your API to be:

1. Secure

APIs are a potential attack vector. A quality solution includes:

Token-based authentication (OAuth 2.0)

Role-based access control

Encryption in transit and at rest

Audit logs and usage tracking
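Once a request's token has been validated, role-based access control comes down to a simple check. The sketch below is a hypothetical in-memory illustration only. The token table, roles, and permission names are invented. A production API would validate OAuth 2.0 access tokens against the issuing authorization server, or verify their signatures, rather than look them up in a dictionary.

```python
import hmac
from typing import Optional

# Hypothetical data: in production, tokens come from an OAuth 2.0 authorization
# server and roles from an identity provider, not from hard-coded dictionaries.
TOKENS = {"token-abc": "analyst", "token-xyz": "admin"}
ROLE_PERMISSIONS = {
    "analyst": {"orders:read"},
    "admin": {"orders:read", "orders:write"},
}


def role_for_token(token: str) -> Optional[str]:
    """Return the role bound to a bearer token, or None if the token is unknown."""
    for known, role in TOKENS.items():
        # compare_digest avoids revealing token contents through comparison timing.
        if hmac.compare_digest(known.encode(), token.encode()):
            return role
    return None


def is_authorized(token: str, permission: str) -> bool:
    """Allow the call only if the token's role grants the requested permission."""
    role = role_for_token(token)
    return role is not None and permission in ROLE_PERMISSIONS.get(role, set())
```

Permissions attach to roles, not to individual tokens, so changing what analysts may do is a one-line edit.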

2. Scalable

Your API must be able to handle increased traffic as your business grows. Proper load balancing, caching, and rate limiting ensure performance under load.
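Rate limiting is often implemented with a token bucket, which is one common algorithm among several. Fixed windows and sliding logs are alternatives. Below is a minimal single-process Python sketch. The rate and capacity values are illustrative. An API running on several load-balanced instances would typically keep this state in a shared store or enforce limits in an API gateway instead.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: allows bursts up to `capacity`, refills at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the caller should be throttled."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Passing in the clock as a parameter lets the limiter be tested deterministically, without real waiting.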

3. Well-Documented

Clear documentation ensures your development teams and third-party vendors can integrate efficiently. OpenAPI/Swagger standards are often used for this purpose.
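For illustration, here is a minimal OpenAPI 3.0 description of a single hypothetical endpoint. It is built as a Python dictionary and serialized to JSON. The API title, path, and parameter name are invented. In practice, the spec is often written directly in YAML or generated from annotations by the web framework.

```python
import json

# A minimal OpenAPI 3.0 description of one hypothetical endpoint.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Legacy Orders API", "version": "1.0.0"},
    "paths": {
        "/v1/orders/{orderId}": {
            "get": {
                "summary": "Fetch one order from the legacy system",
                "parameters": [
                    {
                        "name": "orderId",
                        "in": "path",
                        "required": True,  # path parameters must be marked required
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {
                    "200": {"description": "The order was found"},
                    "404": {"description": "No order with that ID"},
                },
            }
        }
    },
}

if __name__ == "__main__":
    # Emit the spec as JSON so Swagger UI or other OpenAPI tools can load it.
    print(json.dumps(SPEC, indent=2))
```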

4. Versioned and Maintainable

APIs must be versioned so they can evolve without breaking existing integrations. A skilled software development company in New York will build a future-proof architecture.
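One common approach is URI versioning, where the version is part of the path. Header-based versioning is another option. The sketch below uses hypothetical handlers and response shapes to show the idea: a breaking change to the response ships as `/v2`, while `/v1` keeps serving existing integrations unchanged.

```python
from typing import Callable, Dict, Tuple

Handler = Callable[[str], dict]


def get_order_v1(order_id: str) -> dict:
    # Original response shape, kept unchanged for existing integrations.
    return {"id": order_id, "total": "150.75"}


def get_order_v2(order_id: str) -> dict:
    # New shape: amount split into value and currency; v1 clients are unaffected.
    return {"id": order_id, "total": {"value": "150.75", "currency": "USD"}}


# Each (version, resource) pair maps to its own handler.
ROUTES: Dict[Tuple[str, str], Handler] = {
    ("v1", "orders"): get_order_v1,
    ("v2", "orders"): get_order_v2,
}


def dispatch(path: str) -> dict:
    """Route a path like '/v2/orders/123' to the handler for that API version."""
    parts = path.strip("/").split("/")
    if len(parts) != 3:
        raise ValueError(f"unexpected path: {path}")
    version, resource, item_id = parts
    handler = ROUTES.get((version, resource))
    if handler is None:
        raise LookupError(f"no route for {version}/{resource}")
    return handler(item_id)
```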

Custom APIs vs Middleware: What’s the Difference?

Some businesses confuse APIs with middleware. While both can connect systems:

Middleware is a more generalized layer that handles communication between systems

Custom APIs are specifically designed for a particular integration or business need

Custom APIs are more flexible, lightweight, and tailored to your workflow—making them ideal for companies that need precise, scalable connections.

Why Work with a Custom Software Development Company in New York?

Building effective APIs requires a deep understanding of both legacy systems and modern tech stacks. Here’s why businesses trust custom software development companies in New York:

Local knowledge of regulatory requirements, compliance, and market needs

Time zone alignment for seamless collaboration

Experience with complex integrations across industries like finance, healthcare, logistics, and retail

Access to top-tier engineering talent for scalable, secure API architecture

Partnering with the best software development company in New York ensures your integration project is delivered on time, within budget, and aligned with your long-term business goals.

The Role of API Management Platforms

For businesses dealing with multiple APIs, platforms like MuleSoft, Postman, or Azure API Management provide centralized control. A top software development company in New York can help you:

Monitor performance and uptime

Control access and permissions

Analyze usage metrics

Create scalable microservices environments

Conclusion: Modernization Without Disruption

Legacy systems don’t have to hold you back. With the right strategy, they can be revitalized and connected to the tools and platforms that power modern business. Custom APIs act as bridges, allowing you to preserve what works while embracing what’s next.

For businesses in New York looking to modernize without massive overhauls, partnering with a trusted custom software development company in New York like Codezix is the smartest move. From planning and development to deployment and support, Codezix builds the API solutions that connect your old systems with tomorrow’s innovation.

Looking to integrate your legacy systems with modern platforms? Let Codezix, a top software development company in New York, create powerful custom APIs that enable secure, scalable transformation—without starting from scratch.

Know more: https://winklix.wixsite.com/winklix/single-post/custom-software-vs-saas-which-is-more-secure

#custom software development company in new york #software development company in new york #custom software development companies in new york #top software development company in new york #best software development company in new york #How Custom APIs Can Bridge Your Old Systems with Modern Tools

0 notes

Text

It's honestly pretty wild that my beloved Art Nouveau toilet paper holder has been in service since at least 1906 (and functions better than anything made in my lifetime, as well as being stunningly beautiful), my stove has been in service since at least 1954 (with more features and beauty than modern stoves), and the first CD I bought in 1995 still plays, but there are so many games from my childhood that profoundly shaped me and that I will never be able to play again. I can't even find screenshots for many of them.

I have less than a year to decide between:

"Upgrading" to Windows 11 (which I loathe, and won't run all my software).

Trying to switch back to Linux again (which won't run all my software, and has eventually frustrated me back to using Windows 4 times over 20 years).

Permanently taking my desktop offline (which won't run all my software, and would make it basically useless).

Keeping Windows 10 and just rawdogging the malware- and ad-filled modern internet with no security patches ever again (I worked IT helpdesk with an interest in infosec for too many years for this not to give me heart palpitations).

In the 1970s and 1980s my dad worked as a backend systems programmer for a major bank on IBM mainframes. They wrote everything themselves in assembly language. In the 1980s he wrote a utility program with a date function that got widely used, and he had the foresight to think, "This could still be in use far into the future, so I'd better use a 4-digit date." It was still in use in 2000, and as a result the bank had to do very few Y2K upgrades to its backend systems.

In 2012, an old friend who still worked there got so frustrated with contractors who said they couldn't speed up some network login library feature because their preferred modern programming language didn't support it. It was taking over an hour to run. They didn't seem to believe anything more efficient had ever been possible.

Finally, out of frustration, that guy broke out Dad's old utility (which also processed partitioned data sets) and wrote a working demo. It maxed out the entire modern mainframe CPU, but it accomplished the task in 1 minute 15 seconds. It wasn't put back into production, of course, but it did effectively make the point that the specs were not unreasonable, and if the fancy new programming language couldn't do it, then use another damn language that does work.

A decade ago I did IT in a biolab that still had Windows XP computers, because XP was the only operating system that could run the proprietary software controlling the $20k microscopes. The microscopes worked perfectly fine, and we didn't have the budget to replace them. The XP machines had to be on the network because sneakernet violated biohazard lab safety rules, there weren't enough modern computers in the lab to sneakernet the files through them without waiting for someone else to finish, and no one's work could afford the delays. I left before we fully solved that one, but a lot of firewall rules were involved (if we had ever lost the install CDs we would have been fully fucked, because the microscope company had gone out of business at least a decade earlier).

So yeah, the old magic persists because it worked perfectly fine and it's stupid capitalist planned obsolescence that convinces people the old magic is obsolete. We could actually just keep patching perfectly serviceable orbs forever if we valued ongoing maintenance.

“The old magic persists thanks to its unfathomable power.”

No, the old magic persists because the new magic can’t run the legacy spells I need to do my job, and keeps trying to install spirits I don’t want or need onto my orb.

60K notes

Text

Addressing a single executive order from Donald Trump’s voluminous first-day edicts is like singling out one bullet in a burst from an AK-47. But one of them hit me in the gut. That is “Establishing and Implementing the President’s Department of Government Efficiency.” The acronym for that name is DOGE (named after a memecoin), and it’s the Elon Musk–led effort to cut government spending by a trillion bucks or two. Though DOGE was, until this week, pitched as an outside body, this move makes it an official part of government—by embedding it in an existing agency that was formerly part of the Office of Management and Budget called the United States Digital Service. The latter will now be known as the US DOGE Service, and its new head will be more tightly connected to the president, reporting to his chief of staff.

The new USDS will apparently shift its former laser focus on building cost-efficient and well-designed software for various agencies to a hardcore implementation of the Musk vision. It’s kind of like a government version of a SPAC, the dodgy financial maneuver that launched Truth Social in the public market without ever having to reveal a coherent business plan to underwriters.

The order is surprising in a sense because, on its face, DOGE seems more limited than its original super ambitious pitch. This iteration seems more tightly centered on saving money through streamlining and modernizing the government’s massive and messy IT infrastructure. There are big savings to be had, but a handful of zeros short of trillions. As of yet, it’s uncertain whether Musk will become the DOGE administrator. It doesn’t seem big enough for him. (The first USDS director, Mikey Dickerson, jokingly posted on LinkedIn, “I’d like to congratulate Elon Musk on being promoted to my old job.”) But reportedly Musk pushed for this structure as a way to embed DOGE in the White House. I hear that inside the Executive Office Building, there are numerous pink Post-it notes claiming space even beyond USDS’s turf, including one such note on the former chief information officers’ enviable office. So maybe this could be a launch pad for a more sweeping effort that will eliminate whole agencies and change policies. (I was unable to get a White House representative to answer questions, which isn’t surprising considering that there are dozens of other orders that equally beg for explanation.)

One thing is clear—this ends United States Digital Service as it previously existed, and marks a new, and maybe perilous era for the USDS, which I have been enthusiastically covering since its inception. The 11-year-old agency sprang out of the high-tech rescue squad salvaging the mess that was Healthcare.gov, the hellish failure of a website that almost tanked the Affordable Care Act. That intrepid team of volunteers set the template for the agency: a small group of coders and designers who used internet-style techniques (cloud not mainframe; the nimble “agile” programming style instead of the outdated “waterfall” technique) to make government tech as nifty as the apps people use on their phones. Its soldiers, often leaving lucrative Silicon Valley jobs, were lured by the prospect of public service. They worked out of the agency’s funky brownstone headquarters on Jackson Place, just north of the White House. The USDS typically took on projects that were mired in centi-million contracts and never completed—delivering superior results within weeks. It would embed its employees in agencies that requested help, being careful to work collaboratively with the lifers in the IT departments. A typical project involved making DOD military medical records interoperable with the different systems used by the VA. The USDS became a darling of the Obama administration, a symbol of its affiliation with cool nerddom.

During the first Trump administration, deft maneuvering kept the USDS afloat—it was the rare Obama initiative that survived. Its second-in-command, Haley Van Dyck, cleverly got buy-in from Trump’s in-house fixer, Jared Kushner. When I went to meet Kushner for an off-the-record talk early in 2017, I ran into Van Dyck in the West Wing; she gave me a conspiratorial nod that things were looking up, at least for the moment. Nonetheless, the four Trump years became a balancing act in sharing the agency’s achievements while somehow staying under the radar. “At Disney amusement parks, they paint things that they want to be invisible with this certain color of green so that people don't notice it in passing,” one USDSer told me. “We specialized in painting ourselves that color of green.” When Covid hit, that became a feat in itself, as USDS worked closely with White House coronavirus response coordinator Deborah Birx on gathering statistics—some of which the administration wasn’t eager to publicize.

By the end of Trump’s term, the green paint was wearing thin. A source tells me that at one point a Trump political appointee noticed—not happily—that USDS was recruiting at tech conferences for lesbians and minorities, and asked why. The answer was that it was an effective way to find great product managers and designers. The appointee accepted that but asked if, instead of putting “Lesbians Who Tech” on the reimbursement line, could they just say LWT?

Under Biden no subterfuge was needed—the USDS thrived. But despite many months of effort, it could not convince Congress to give it permanent funding. With the return of Trump, and his promises to cut government spending, there was reason to think that USDS would evaporate. That’s why the DOGE move is kind of bittersweet—at least it now has more formal recognition and ostensibly will get a reliable budget line.

How will the integration work? The executive order mandates that in addition to normal duties the USDS director will also head a temporary organization “dedicated to advancing the President’s 18-month DOGE agenda.” That agenda is not clearly defined, but elsewhere the order speaks of improving the quality and efficiency of government-wide software, systems, and infrastructure. More specific is the mandate to embed four-person teams inside every agency to help realize the DOGE agenda. The order is very explicit that the agency must provide “full and prompt access to all unclassified agency records, software systems, and IT systems.” Apparently Musk is obsessed with an unprecedented centralization of the data that makes the government go—or not. This somewhat adversarial stance is a dramatic shift from the old USDS MO of working collegially with the lifers inside the agencies.

Demanding all that data might be a good thing. Clare Martorana, who until last week was the nation’s chief information officer, says that while she saw many victories during her eight years in government tech, making big changes has been tough, in large part because of the difficulty of getting such vital data. “We have budget data that is incomprehensible,” she says. “The agency understands it, but they hide money in all kinds of places, so no one can really get a 100,000-foot view. How many open positions do they have? What are the skill sets? What are their top contracts? When are they renegotiating their most important contracts? How much do they spend on operations and maintenance versus R&D or innovation? You should know all these things.” If DOGE gets that information and uses it well, it could be transformational. “Through self-reporting, we spend $120 billion on IT,” she says. “If we found all the hidden money and shadow IT, it's $200, $300, maybe $500 billion. We lose a lot of money on technology we buy stupidly, and we don't deliver services to the American public that they deserve.” So this Trump effort could be a great thing? “I’m trying very hard to be optimistic about it,” says Martorana. The USDS’s outgoing director, Mina Hsiang, is also trying to be upbeat. “I think there's a tremendous opportunity,” she says. “I don't know what [DOGE] will do with it, but I hope that they listen to a lot of great folks who are there.”

On the other hand, those four-person teams could be a blueprint for mayhem. Up until now, USDS would send only engineers and designers into agencies, and their focus was to build things and hopefully set an example for the full-timers to do work like they do at Google or Amazon. The EO dictates only one engineer in a typical four-person team, joined by a lawyer (not known for building stuff), an HR person (known for firing people), and a “team lead” whose job description sounds like a political enforcer: “implementing the president’s DOGE Agenda.” I know that’s a dark view, but Elon Musk—and his new boss—are no strangers to clearing out a workplace. Maybe they’ll figure AI can do things better.

Whichever way it goes, the original Obama-era vibes of the USDS may forever be stilled—to be superseded by a different kind of idealist in MAGA garb. As one insider told me, “USDS leadership is pretty ill equipped to navigate the onslaught of these DOGE guys, and they are going to get the shit kicked out of them.” Though not perfect, the USDS has by dint of hard work, mad skills, and corny idealism, made a difference. Was there really a need to embed the DOGE experiment into an agency that was doing good? And what are the odds that on July 4, 2026, when the “temporary” DOGE experiment is due to end, the USDS will sunset as well? At best, the new initiative might help unravel the near intractable train wreck that is government IT. But at worst, the integration will be like a greedy brain worm wreaking havoc on its host.

8 notes

Text

Global Wind Turbine Casting Market Set for 11.3% CAGR Through 2031

Wind turbine casting constitutes the backbone of modern wind-energy generation, supplying essential components (hubs, mainframes, gearbox casings, towers, and internal parts such as rotor shafts) that must withstand extreme loads and harsh environments over 20-year lifespans. In 2022, the global wind turbine casting market was valued at US$ 2.4 Bn. Driven by surging installation of onshore and offshore wind farms, elevated government incentives for clean energy, and utilities’ decarbonization targets, the market is projected to expand at an 11.3% CAGR from 2023 to 2031, reaching US$ 6.4 Bn by 2031.
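As a quick consistency check on these figures, recall that a compound annual growth rate r satisfies end = start × (1 + r)^n. The short Python sketch below works in both directions, assuming growth compounds over the nine years from the 2022 base to 2031. By this arithmetic, the stated US$ 2.4 Bn and US$ 6.4 Bn imply roughly 11.5% a year, and 11.3% applied to US$ 2.4 Bn gives about US$ 6.29 Bn. The two figures therefore agree to within rounding, or the report's model may use a different base year.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end over `years`."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` periods."""
    return start * (1 + rate) ** years


if __name__ == "__main__":
    # Figures from the text: US$ 2.4 Bn (2022) and US$ 6.4 Bn (2031), assumed nine years of growth.
    print(f"Implied CAGR: {cagr(2.4, 6.4, 9):.1%}")
    print(f"2.4 Bn at 11.3% for 9 years: {project(2.4, 0.113, 9):.2f} Bn")
```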

Market Drivers & Trends

Government Support & Regulatory Push: Subsidies, tax credits, and renewable-energy mandates in Europe, North America, and Asia Pacific are accelerating wind-turbine deployments, swelling demand for cast components.

Scale-up of Turbine Capacities: The shift toward 10 MW+ turbines, particularly offshore, requires ever-larger, high-integrity castings, boosting per-unit casting revenue.

Decarbonization & ESG Focus: Utilities and industrial end-users are prioritizing zero-carbon electricity, favoring wind power over coal and gas.

Technological Innovations: Advances in sand-casting automation, 3D-printed molds, and novel alloys (e.g., high-strength duplex stainless steel) are improving component fatigue life and reducing lead times.

Latest Market Trends

Modular Casting Lines: Manufacturers are deploying automated, modular casting cells capable of running multiple mold types in parallel—speeding up tool changeover and cutting per-unit costs.

Lightweight Aluminum Alloys: A growing share of hubs and small nacelle parts is shifting to aluminum for weight reduction—enabling longer blades and higher capacity factors.

Digital Twins & Quality Inspection: AI-driven inspection systems and digital-twin simulations are minimizing scrap rates and ensuring consistent tolerances in complex geometries.

Green Casting Processes: Producers are adopting low-VOC binders and recycling foundry sand to reduce the carbon footprint of casting operations.

Key Players and Industry Leaders

RIYUE HEAVY INDUSTRY CORPORATION.LTD

DALIAN HUARUI HEAVY INDUSTRY

Hedrich

ENERCON

Inox Wind Limited

Shandong Longma Heavy

Simplex Castings

WALZENGIESSEREI

Silbitz Group

MIKROMAT GmbH

Siempelkamp Giesserei

Mingyang Smart Energy Group Co. Ltd.

Juwi Holding AG

DYNAQUIP ENGINEERS

Jiangsu Xihua Inc.

Others

Recent Developments

February 2023 (DHHI): Successfully rolled out cast hub and mainframe for 18 MW offshore units—each part exceeding 6.5 m in height and 70 t in weight—marking a milestone in casting large structures with zero porosity.

June 2022 (Mingyang Smart Energy): Introduced the MySE 12 MW hybrid-drive turbine; castings integrated advanced cooling channels and high-strength alloys to endure super-typhoon wind speeds exceeding 78.8 m/s.

Q1 2024 (Various OEMs): Piloted the first continuous-casting line dedicated to wind turbine shafts, doubling throughput and slashing energy consumption per tonne of steel by 20%.

Get a concise overview of key insights from our Report in this sample – https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=3455

Market Opportunities

Emerging Offshore Markets: Rapid expansion of offshore wind in the U.S. (East Coast leases), Taiwan, and Vietnam offers greenfield opportunities for large-scale casting facilities.

Aftermarket & Spare Parts: Growth in installed base will fuel a surge in replacement castings—gearbox housings, hubs, and crankshafts—with shorter lead-time requirements and premium pricing.

Service Centers & Localized Production: On-shore foundries near major wind-farm clusters can capture fast-turn revenue and lower logistics costs, appealing to OEMs seeking agile supply chains.

Future Outlook

By 2031, continuous automation, process optimization, and alloy innovations will push unit production times down by 25% and elevate casting yield rates above 95%. As turbines grow in diameter and capacity, foundries capable of handling single-piece castings over 80 t will dominate. Simultaneously, circular-economy practices—remanufacturing, alloy recycling, and green-binder adoption—will become standard, aligning with net-zero commitments. Strategic tie-ups between turbine OEMs and casting specialists are likely to proliferate, ensuring supply security and joint R&D for next-generation materials.

Market Segmentation

Type: Horizontal Axis; Vertical Axis

Casting Technology: Sand Casting; Chill Casting; Others

Component: Rotor Blades; Rotor Hubs; Axle Pin; Rotor Shaft; Gearbox; Tower; Others

Application: Onshore; Offshore

Region: North America; Europe; Asia Pacific; MEA; Latin America

Regional Insights

Asia Pacific: Held the largest share in 2022, led by China’s mega-parks and India’s offshore pilot projects. Local foundries are scaling up to meet domestic demand and export to Southeast Asia.

North America: Ranks second; U.S. stimulus under the Inflation Reduction Act is driving record onshore and offshore procurement, with key casting hubs in the Gulf Coast region.

Europe: Mature market focusing on next-gen materials and circular foundry practices; Germany and Denmark remain casting innovation hotspots.

Latin America & MEA: Emerging markets with nascent wind sectors; first wave of onshore farms in Brazil and South Africa will create greenfield casting requirements.

Why Buy This Report?

Detailed 2020–2022 Historical Analysis: Quantitative data and trend drivers mapped to key macro events.

Comprehensive Segment & Country Coverage: Deep-dive into 20+ countries with market forecasts by casting type, component, and application.

Competitive Landscape: Profiles of 20+ manufacturers, including financials, strategies, and new product launches.

Proprietary Models & Assumptions: Transparent CAGR calculations and scenario analyses under varying policy regimes.

Value-Added Tools: Excel model for “what-if” sensitivity analyses and interactive PDF for on-the-fly data filtering.

Frequently Asked Questions

What is driving the high CAGR in wind turbine castings? Surge in offshore and onshore wind installations, government subsidies, and shift toward larger turbine platforms.

How are castings evolving to meet new turbine designs? Adoption of advanced alloys, automated molding, and digital-twin quality controls are enabling complex, high-integrity geometries.

Which regions offer the fastest growth? Asia Pacific leads, followed by North America, especially its offshore projects under recent clean-energy legislation.

How significant is the aftermarket segment? Replacement parts represent 20–25% of annual casting revenues, poised to grow with expanding turbine fleet.

Does the report cover supply-chain risks? Yes, including analysis of raw-material dependencies, logistics bottlenecks, and alternative alloy sourcing.

Explore Latest Research Reports by Transparency Market Research:

Bioheat Fuel Market: https://www.transparencymarketresearch.com/bioheat-fuel-market.html

Wind Turbine Decommissioning Market: https://www.transparencymarketresearch.com/wind-turbine-decommissioning-market.html

FPSO Pump Market: https://www.transparencymarketresearch.com/fpso-pump-market.html

Well Intervention Market: https://www.transparencymarketresearch.com/well-intervention-market.html

About Transparency Market Research

Transparency Market Research, a global market research company registered at Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of Analysts, Researchers, and Consultants uses proprietary data sources and various tools and techniques to gather and analyze information. Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact: Transparency Market Research Inc.

CORPORATE HEADQUARTER DOWNTOWN, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801 USA

Tel: +1-518-618-1030

USA - Canada Toll Free: 866-552-3453

Website: https://www.transparencymarketresearch.com

0 notes